At CEATEC 2024, we visited the JVCKENWOOD booth, which featured three areas themed around “Connecting people, time, and space to create the future.” This aligns with the company’s philosophy of “bringing excitement and peace of mind to people around the world” by using cutting-edge technology to enrich lives. JVCKENWOOD showcased innovative video, audio, and wireless solutions designed to create new value for the future. The first area, which the company calls the “Impression – Entertainment Area,”* featured tech demonstrations that engaged all five senses (vision, hearing, touch, smell, and taste), providing a deeply immersive, multi-sensory experience.

JVCKENWOOD booth 3D rendering for CEATEC 2024 – image courtesy of JVCKENWOOD

AI Creates Music and Video Based On Your Subconscious Emotional Response

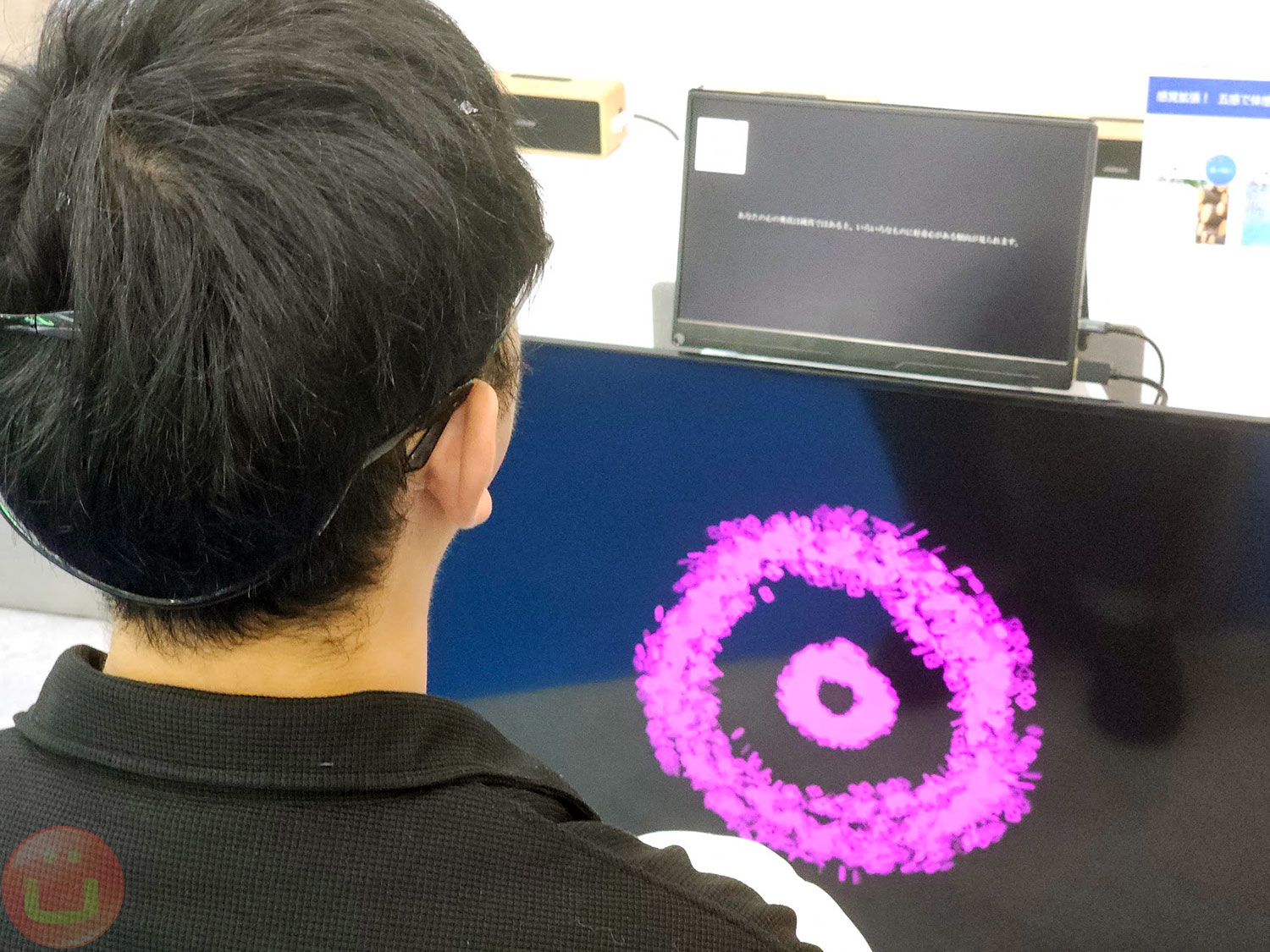

At the JVCKENWOOD booth’s “Impression – Entertainment Area,”* we experienced an innovative demo combining brainwave detection, human senses, and AI to create personalized music and video. Participants are exposed to stimuli, such as pre-prepared videos, designed to evoke emotional responses. A brainwave headset then detects the emotions generated, and AI uses this data to compose music and project corresponding images in real time. The result, described as “the music and video you created,” is played back. The AI further classifies the participant’s subconscious reactions, automatically generating music and visuals that reflect their emotional state, offering a unique interactive experience.

How Does The Technology Behind the Emotion-driven AI-Generated Music And Video Creation Work?

Suguru Goto, Associate Professor in the Department of Musical Creativity and the Environment at Tokyo University of the Arts, presented the demo, which was developed by a team of researchers with the support of JVCKENWOOD. Goto, who is also a composer and media artist, used it to showcase how brain-computer interface technology can be applied to art creation.

How Did The Demo Work?

The team used an Emotiv Insight EEG headset, a device designed to help researchers and engineers develop brain-computer interface solutions in various fields. Having personally tested several Emotiv products in their early days, I can vouch for the robustness of their brainwave-enabled hardware tools, which have become trusted solutions in neuroscience-based interface development.

When we arrived at the demo, the headset was already calibrated to the participant’s brainwaves. According to Suguru Goto, the system requires just a few minutes to calibrate for each new user. The process began with a brief meditation session, followed by the participant viewing several pre-prepared videos designed to trigger specific emotional responses. The Emotiv Insight headset recorded the brainwaves during these activities while AI-driven software interpreted the data.

Suguru Goto explained that brainwaves are analyzed using machine learning algorithms, which categorize the data into 28 emotional states. These states are organized on a Cartesian plane, with the X-axis (horizontal) ranging from discomfort (unpleasure) to pleasure, and the Y-axis (vertical) ranging from alertness (arousing) to sleepiness. He also showed me a tablet displaying a graphic representation of how the software further divides these 28 emotional states into 64 subdivisions, providing a more detailed view of the participant’s emotional responses.

28 emotional states, subdivided into 64 divisions, are organized on a Cartesian plane, with the X-axis (horizontal) ranging from discomfort (unpleasure) to pleasure and the Y-axis (vertical) ranging from alertness (arousing) to sleepiness.
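To make the idea of a pleasure/alertness plane more concrete, here is a minimal Python sketch of how a point on such a plane could be assigned to one of 64 subdivisions. This is not the team’s software: the 8 x 8 grid layout, the -1.0 to +1.0 value range, and the names `EmotionPoint`, `grid_cell`, and `quadrant` are all assumptions made for illustration, since the demo’s actual subdivision scheme and its 28 state labels were not disclosed.

```python
from dataclasses import dataclass

# Assumption: the 64 subdivisions form an 8 x 8 grid over the plane.
GRID_SIZE = 8


@dataclass(frozen=True)
class EmotionPoint:
    """Hypothetical point on the emotion plane described in the article."""
    valence: float  # X-axis: -1.0 (discomfort) to +1.0 (pleasure)
    arousal: float  # Y-axis: -1.0 (sleepiness) to +1.0 (alertness)


def _clamp(value: float) -> float:
    """Keep a coordinate inside the assumed [-1.0, +1.0] range."""
    return max(-1.0, min(1.0, value))


def _bin(value: float) -> int:
    """Map a coordinate in [-1.0, +1.0] to a grid index in 0..GRID_SIZE-1."""
    scaled = (_clamp(value) + 1.0) / 2.0 * GRID_SIZE
    # The upper edge (+1.0) would land on GRID_SIZE, so fold it into the last bin.
    return min(int(scaled), GRID_SIZE - 1)


def grid_cell(point: EmotionPoint) -> tuple[int, int]:
    """Return the (column, row) of the grid cell that contains the point."""
    return _bin(point.valence), _bin(point.arousal)


def quadrant(point: EmotionPoint) -> str:
    """Return a coarse label for the quadrant that contains the point."""
    horizontal = "pleasant" if point.valence >= 0 else "unpleasant"
    vertical = "alert" if point.arousal >= 0 else "sleepy"
    return f"{vertical}/{horizontal}"


if __name__ == "__main__":
    sample = EmotionPoint(valence=0.5, arousal=-0.5)
    print(grid_cell(sample), quadrant(sample))
```

In a setup like this, a classifier would output a pair of coordinates for each time window of EEG data, and the resulting grid cell could then select musical or visual parameters. How the actual system links emotional states to generated content was not detailed at the booth.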

After the participant completed the video sessions, the system automatically generated music and video based on his emotional responses. This demonstration highlighted how brainwave data, combined with artificial intelligence, can produce personalized audio-visual content driven by subconscious responses, offering a glimpse into the potential of brain-computer interface technologies in creative fields.

For the demo at CEATEC, the sound was played through JVCKENWOOD wooden speakers, and the AI-generated video was displayed on a large screen.

The artistic concept of the project, shared with me in a private video, involves showing emotion-triggering videos on a monitor facing the participant, while the AI-generated visuals are projected onto the floor in a dark room where the person is seated.

Beyond AI-based Art: Unlock Limitless Possibilities For Human Wellness

Even though this is an impressive artistic research project, Associate Professor Suguru Goto indicated that it offers significant potential for health-related applications, particularly mental health care.

I know that Emotiv’s primary goal is to provide high-quality EEG-based hardware and software tools to enable researchers to develop solutions that improve cognitive and mental health. This includes creating innovative approaches to music neurofeedback therapy.

JVCKENWOOD SP-WS02BT wooden speakers – the audio playback was great

In addition to video stimuli, other sensory inputs—such as sound, taste, and smell—could also trigger emotional responses in participants. For example, the participant could hold a JVCKENWOOD “Hug Speaker” (see photo), which vibrates in sync with the sound, or enjoy the scent of the wood from speakers crafted from various types of natural wood. These stimuli could complement the AI-generated video and music created from brainwave data.

“Hug Speaker” – photo courtesy of JVCKENWOOD

Although we didn’t experience the demo for other sensory stimuli at the booth, I am confident they would work seamlessly with the same demo setup and AI-driven software, creating images and music based on the participant’s subconscious emotional response to this sensory stimulation.

* Editor’s note: “Impression – Entertainment Area” is an English translation by Google, based on JVCKENWOOD’s Japanese press release.
